Download MuleSoft Certified Integration Architect-Level 1 MAINTENANCE.MCIA-LEVEL-1-MAINTENANCE.VCEplus.2024-11-09.116q.tqb

| Field | Value |
|---|---|
| Vendor: | Mulesoft |
| Exam Code: | MCIA-LEVEL-1-MAINTENANCE |
| Exam Name: | MuleSoft Certified Integration Architect-Level 1 MAINTENANCE |
| Date: | Nov 09, 2024 |
| File Size: | 4 MB |

How to open TQB files?

Files with TQB (Taurus Question Bank) extension can be opened by Taurus Exam Studio.


Demo Questions

Question 1

An organization is designing a Mule application to support an all-or-nothing transaction between several database operations and some other connectors, so that they all roll back if there is a problem with any of the connectors. Besides the Database connector, what other connector can be used in the transaction?

- VM

- Anypoint MQ

- SFTP

- ObjectStore

Correct answer: A

Explanation:

Correct answer is VM. The VM connector supports transactions, so VM operations can take part in the same transaction as the Database operations. When an exception occurs, the transaction rolls back to its original state so the work can be reprocessed. Among the listed options, Anypoint MQ, SFTP, and Object Store do not support this kind of transaction.
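To make the all-or-nothing behaviour concrete outside Mule, here is a toy Java sketch: each step stages its writes, and the staged writes reach the store only if every step completes without throwing. This is not how Mule's transaction manager works internally; `ToyTransaction` and `runAllOrNothing` are hypothetical names, and keys such as `db:order` or `vm:queue` merely stand in for a Database insert and a VM publish.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical toy model of all-or-nothing semantics: writes are staged in the
// transaction and only applied to the shared store if every step succeeds.
final class ToyTransaction {
    private final Map<String, String> pending = new HashMap<>();

    // Stage a write; nothing is visible in the store yet.
    void write(String key, String value) {
        pending.put(key, value);
    }

    // Runs each step in order. If any step throws, all staged writes are discarded
    // (rollback) and false is returned; otherwise they are applied together (commit).
    static boolean runAllOrNothing(Map<String, String> store,
                                   List<Consumer<ToyTransaction>> steps) {
        ToyTransaction tx = new ToyTransaction();
        try {
            for (Consumer<ToyTransaction> step : steps) {
                step.accept(tx);
            }
        } catch (RuntimeException e) {
            tx.pending.clear(); // rollback: the store is untouched
            return false;
        }
        store.putAll(tx.pending); // commit: all staged writes applied together
        return true;
    }
}
```

If the second of two steps throws, the first step's staged write is discarded too, which is the behaviour the question asks for across the Database and VM operations.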

Question 2

A Mule application uses an HTTP Request operation to invoke an external API.

The external API follows the HTTP specification for proper status code usage.

What is a possible cause when a 3xx status code is returned to the HTTP Request operation from the external API?

- The request was not accepted by the external API

- The request was Redirected to a different URL by the external API

- The request was NOT RECEIVED by the external API

- The request was ACCEPTED by the external API

Correct answer: B

Explanation:

3xx HTTP status codes indicate redirection: the user agent (for example, a web browser or a crawler) needs to take further action to access the requested resource, typically by following the URL given in the response's Location header.

Reference: https://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html
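HTTP groups status codes into classes by their first digit, and 3xx is the redirection class referenced above. The Java sketch below shows that mapping; `HttpStatusClassifier` and `StatusClass` are hypothetical names used only for illustration. Note that some 3xx codes, such as 304 Not Modified, do not point the client to a different URL.

```java
// Hypothetical names; the classes follow RFC 2616 section 10:
// 1xx informational, 2xx success, 3xx redirection, 4xx client error, 5xx server error.
enum StatusClass { INFORMATIONAL, SUCCESS, REDIRECTION, CLIENT_ERROR, SERVER_ERROR, UNKNOWN }

final class HttpStatusClassifier {
    private HttpStatusClassifier() {}

    // The first digit of a valid status code (100-599) determines its class.
    static StatusClass classify(int code) {
        if (code < 100 || code > 599) {
            return StatusClass.UNKNOWN;
        }
        switch (code / 100) {
            case 1:  return StatusClass.INFORMATIONAL;
            case 2:  return StatusClass.SUCCESS;
            case 3:  return StatusClass.REDIRECTION; // e.g. 301, 302, 307
            case 4:  return StatusClass.CLIENT_ERROR;
            default: return StatusClass.SERVER_ERROR; // only 5xx can reach here
        }
    }

    // True for any 3xx code; a client still needs the Location header to know where to go.
    static boolean isRedirection(int code) {
        return classify(code) == StatusClass.REDIRECTION;
    }
}
```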

Question 3

An organization is migrating all its Mule applications to Runtime Fabric (RTF). None of the Mule applications use Mule domain projects.

Currently, all the Mule applications have been manually deployed to a server group among several customer-hosted Mule runtimes.

Port conflicts between these Mule application deployments are currently managed by the DevOps team, who carefully manage Mule application properties files.

When the Mule applications are migrated from the current customer-hosted server group to Runtime Fabric (RTF), do the Mule applications need to be rewritten, and which DevOps port configuration responsibilities change or stay the same?

- Yes, the Mule applications MUST be rewritten; DevOps NO LONGER needs to manage port conflicts between the Mule applications.

- Yes, the Mule applications MUST be rewritten; DevOps MUST STILL manage port conflicts.

- No, the Mule applications do NOT need to be rewritten; DevOps MUST STILL manage port conflicts.

- No, the Mule applications do NOT need to be rewritten; DevOps NO LONGER needs to manage port conflicts between the Mule applications.

Correct answer: D

Explanation:

- Anypoint Runtime Fabric is a container service that automates the deployment and orchestration of your Mule applications and gateways.

- Runtime Fabric runs on customer-managed infrastructure on AWS, Azure, virtual machines (VMs), or bare-metal servers.

- Because none of the Mule applications use Mule domain projects, the applications do not need to be rewritten.

- Each application deployed to RTF runs in its own container, and by default ingress is allowed only on port 8081, so every application can use the same port without colliding with the others.

- Hence the DevOps team no longer needs to manage port conflicts between the Mule applications.
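The port argument can be illustrated with a small, hypothetical Java model (not a Runtime Fabric or Runtime Manager API): when applications share one host's network, as on a customer-hosted runtime in a server group, two applications configured with the same port collide, whereas one container per application gives each its own network namespace. `PortConflictCheck`, `conflicts`, and the application names are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model, not a Runtime Fabric API. appPorts maps an application name
// to the HTTP listener port it is configured with.
final class PortConflictCheck {
    private PortConflictCheck() {}

    // sharedNetwork = true models applications deployed together on the same
    // customer-hosted Mule runtime; false models one container per application.
    static Map<Integer, List<String>> conflicts(Map<String, Integer> appPorts,
                                                boolean sharedNetwork) {
        Map<Integer, List<String>> byPort = new TreeMap<>();
        if (!sharedNetwork) {
            return byPort; // no two apps share a namespace, so no port can collide
        }
        for (Map.Entry<String, Integer> entry : appPorts.entrySet()) {
            byPort.computeIfAbsent(entry.getValue(), port -> new ArrayList<>())
                  .add(entry.getKey());
        }
        byPort.values().removeIf(apps -> apps.size() < 2); // keep only contested ports
        return byPort;
    }
}
```

With two applications both configured for 8081, the shared-network case reports one conflict on 8081, while the per-container case reports none.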

Question 4

An organization is evaluating using the CloudHub Shared Load Balancer (SLB) vs. creating a CloudHub Dedicated Load Balancer (DLB). They are evaluating how this choice affects the various types of certificates used by CloudHub-deployed Mule applications, including MuleSoft-provided, customer-provided, or Mule application-provided certificates.

What type of restrictions exist on the types of certificates that can be exposed by the CloudHub Shared Load Balancer (SLB) to external web clients over the public internet?

- Only MuleSoft-provided certificates are exposed.

- Only customer-provided wildcard certificates are exposed.

- Only customer-provided self-signed certificates are exposed.

- Only underlying Mule application certificates are exposed (pass-through)

Correct answer: A

Explanation:

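The CloudHub Shared Load Balancer serves only the MuleSoft-provided certificate for the cloudhub.io domain; exposing customer-provided certificates requires a Dedicated Load Balancer. Reference: https://docs.mulesoft.com/runtime-manager/dedicated-load-balancer-tutorial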

Question 5

A Mule application is being designed to receive, nightly, a CSV file containing millions of records from an external vendor over SFTP. The records from the file need to be validated, transformed, and then written to a database.

Records can be inserted into the database in any order.

In this use case, what combination of Mule components provides the most effective and performant way to write these records to the database?

- Use a Parallel For Each scope to insert records one by one into the database

- Use a Scatter-Gather to bulk insert records into the database

- Use a Batch Job scope to bulk insert records into the database

- Use a DataWeave map operation and an Async scope to insert records one by one into the database.

Correct answer: C

Explanation:

Correct answer: Use a Batch Job scope to bulk insert records into the database. A batch job is the most efficient way to process millions of records.

A few points to note:

Reliability: If the processing must survive a runtime crash or other failure and, after a restart, continue with all remaining records, use a batch job, because it stores records in persistent queues.

Error handling: In a Parallel For Each, an error in a route stops processing in that route, and you need to handle it, for example with an On Error Continue handler. A batch job does not stop on such errors; instead, you can add a step dedicated to handling failed records.

Memory footprint: With millions of records to process, a Parallel For Each aggregates all processed records into one collection at the end, which can cause an out-of-memory error.

A batch job instead provides a BatchJobResult in the On Complete phase, where you can read the counts of successful and failed records. For large-file processing where order does not matter, a batch job is the right choice.
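The batch-style pattern described above (validate records independently, write them in blocks, count failures rather than stop) can be sketched in plain Java. This is a hypothetical illustration, not the Mule Batch module: `BatchSketch`, `BulkWriter`, `Result`, and the block size are assumptions, and a real implementation would write each block with a bulk database insert.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical sketch of batch-style processing (not the Mule Batch module).
final class BatchSketch {
    private BatchSketch() {}

    // Stand-in for a bulk database insert of one block of records.
    interface BulkWriter<T> {
        void insertAll(List<T> block);
    }

    // Counters comparable in spirit to what an On Complete phase reports.
    static final class Result {
        int successful;
        int failed;
        int bulkWrites;
    }

    static <T> Result run(Iterable<T> records, Predicate<T> isValid,
                          int blockSize, BulkWriter<T> writer) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be positive");
        }
        Result result = new Result();
        List<T> block = new ArrayList<>(blockSize); // only one block is held at a time
        for (T record : records) {
            if (!isValid.test(record)) {
                result.failed++; // record fails validation; the job keeps going
                continue;
            }
            block.add(record);
            if (block.size() == blockSize) {
                flush(block, writer, result);
            }
        }
        if (!block.isEmpty()) {
            flush(block, writer, result); // last, partially filled block
        }
        return result;
    }

    // Assumes the bulk write succeeds; a real job would also track block failures.
    private static <T> void flush(List<T> block, BulkWriter<T> writer, Result result) {
        writer.insertAll(new ArrayList<>(block)); // one bulk write per block, not per record
        result.successful += block.size();
        result.bulkWrites++;
        block.clear();
    }
}
```

For 250 records where every 50th one fails validation and the block size is 100, the sketch performs three bulk writes (100, 100, and 45 records) and reports 245 successes and 5 failures.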

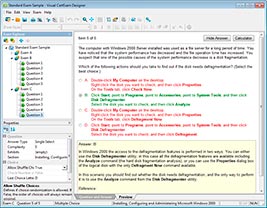

HOW TO OPEN VCE FILES

Use VCE Exam Simulator to open VCE files

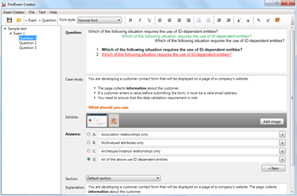

HOW TO OPEN VCEX FILES

Use ProfExam Simulator to open VCEX files

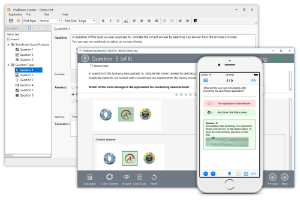

ProfExam at a 20% markdown

You have the opportunity to purchase ProfExam at a 20% reduced price

Get Now!