Download AWS Certified Big Data-Specialty Dumps: AWS-Certified-Big-Data-Specialty.Pass4Success.2026-02-05.119q.tqb

| Vendor: | Amazon |
|---|---|
| Exam Code: | AWS-Certified-Big-Data-Specialty |
| Exam Name: | AWS Certified Big Data-Specialty Dumps |
| Date: | Feb 05, 2026 |
| File Size: | 412 KB |

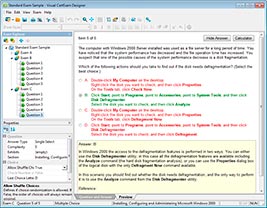

How to open TQB files?

Files with the TQB (Taurus Question Bank) extension can be opened with Taurus Exam Studio.


Demo Questions

Question 1

An organization is using Amazon Kinesis Data Streams to collect data generated by thousands of temperature devices and is using AWS Lambda to process the data. The devices generate 10 to 12 million records every day, but Lambda is processing only around 450 thousand records per day. Amazon CloudWatch indicates that Lambda is not being throttled.

What should be done to ensure that all data is processed? (Choose two.)

- Increase the BatchSize value on the EventSource, and increase the memory allocated to the Lambda function.

- Decrease the BatchSize value on the EventSource, and increase the memory allocated to the Lambda function.

- Create multiple Lambda functions that will consume the same Amazon Kinesis stream.

- Increase the number of vCores allocated for the Lambda function.

- Increase the number of shards on the Amazon Kinesis stream.

Correct answer: A, E

Explanation:

https://tech.trivago.com/2018/07/13/aws-kinesis-with-lambdas-lessons-learned/
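A rough way to see why both batch size and shard count matter: a Kinesis event source mapping (with the default parallelization factor of 1) invokes the function with one batch per shard at a time, so throughput scales roughly with shards x batch size / invocation duration. This is a minimal sketch under stated assumptions: batches are always full, polling delays are ignored, and the batch sizes and invocation times are hypothetical inputs, not measurements from the question.

```python
import math

SECONDS_PER_DAY = 86_400


def records_per_day(shards: int, batch_size: int, invocation_seconds: float) -> float:
    """Rough ceiling on records processed per day.

    Assumes one in-flight batch per shard, every batch full, and no polling delay.
    """
    if invocation_seconds <= 0:
        raise ValueError("invocation_seconds must be positive")
    per_second = shards * batch_size / invocation_seconds
    return per_second * SECONDS_PER_DAY


def shards_needed(target_per_day: int, batch_size: int, invocation_seconds: float) -> int:
    """Smallest shard count whose rough ceiling meets the daily target."""
    per_shard = records_per_day(1, batch_size, invocation_seconds)
    return math.ceil(target_per_day / per_shard)


if __name__ == "__main__":
    # Hypothetical: 100-record batches that each take 1 second to process
    print(records_per_day(1, 100, 1.0))
    print(shards_needed(12_000_000, 100, 1.0))
    # Hypothetical: larger batches, with extra memory keeping invocation time at 1 second
    print(shards_needed(12_000_000, 500, 1.0))
```

Under these assumptions, one shard with 100-record, one-second batches tops out near 8.64 million records per day, so 12 million would need two shards; a larger batch processed just as quickly (for example, with more memory) raises the per-shard ceiling instead.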

Question 2

An organization is currently using an Amazon EMR long-running cluster with the latest Amazon EMR release for analytic jobs and is storing data as external tables on Amazon S3.

The organization needs to launch multiple transient EMR clusters that access the same tables concurrently, but the metadata for the Amazon S3 external tables is defined and stored on the long-running cluster.

Which solution will expose the Hive metastore with the LEAST operational effort?

- Export Hive metastore information to Amazon DynamoDB and configure the Amazon EMR hive-site classification to point to the Amazon DynamoDB table.

- Export Hive metastore information to a MySQL table on Amazon RDS and configure the Amazon EMR hive-site classification to point to the Amazon RDS database.

- Launch an Amazon EC2 instance, install and configure Apache Derby, and export the Hive metastore information to Derby.

- Create and configure an AWS Glue Data Catalog as a Hive metastore for Amazon EMR.

Correct answer: B
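On each transient cluster, Hive is pointed at a shared external metastore through the `hive-site` configuration classification supplied at launch (for example, `aws emr create-cluster --configurations file://hive-config.json`, file name hypothetical). This is a minimal sketch that builds that classification as JSON, assuming a MySQL-compatible database on Amazon RDS. The endpoint, user name, and password are hypothetical placeholders, and the driver class follows the example in the Amazon EMR documentation.

```python
import json


def hive_site_external_metastore(jdbc_url: str, user: str, password: str) -> list:
    """Build an EMR configuration list that points Hive at an external metastore database."""
    return [
        {
            "Classification": "hive-site",
            "Properties": {
                # JDBC URL of the shared metastore database
                "javax.jdo.option.ConnectionURL": jdbc_url,
                # Driver class as used in the EMR docs example for MySQL-compatible databases
                "javax.jdo.option.ConnectionDriverName": "org.mariadb.jdbc.Driver",
                "javax.jdo.option.ConnectionUserName": user,
                "javax.jdo.option.ConnectionPassword": password,
            },
        }
    ]


if __name__ == "__main__":
    config = hive_site_external_metastore(
        # Hypothetical RDS endpoint and database name
        "jdbc:mysql://metastore.example.internal:3306/hive?createDatabaseIfNotExist=true",
        "hive_user",   # hypothetical user name
        "change-me",   # hypothetical; keep real secrets out of source code
    )
    print(json.dumps(config, indent=2))
```

If the AWS Glue Data Catalog is used as the metastore instead, the same `hive-site` classification sets `hive.metastore.client.factory.class` to `com.amazonaws.glue.catalog.metastore.AWSGlueDataCatalogHiveClientFactory` rather than the JDBC connection properties.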

Question 3

What does the "Server Side Encryption" option on Amazon S3 provide?

- It provides an encrypted virtual disk in the Cloud.

- It doesn't exist for Amazon S3, but only for Amazon EC2.

- It encrypts the files that you send to Amazon S3, on the server side.

- It allows you to upload files over an SSL endpoint for secure transfer.

Correct answer: C

Question 4

Can I attach more than one policy to a particular entity?

- Yes always

- Only if within GovCloud

- No

- Only if within VPC

Correct answer: A

Question 5

When will you incur costs with an Elastic IP address (EIP)?

- When an EIP is allocated

- When it is allocated and associated with a running instance

- When it is allocated and associated with a stopped instance

- Costs are incurred regardless of whether the EIP is associated with a running instance

Correct answer: C

Question 6

An online retailer is using Amazon DynamoDB to store data related to customer transactions. The items in the table contain several string attributes describing the transaction, as well as a JSON attribute containing the shopping cart and other details of the transaction. The average item size is ~250 KB, most of which is taken up by the JSON attribute. The average customer generates ~3 GB of data per month.

Customers access the table to display their transaction history and to review transaction details as needed. Ninety percent of queries against the table are executed when building the transaction history view; the other 10% retrieve transaction details. The table is partitioned on CustomerID and sorted on transaction date.

The client has very high read capacity provisioned for the table and experiences very even utilization, but complains about the cost of Amazon DynamoDB compared to other NoSQL solutions.

Which strategy will reduce the cost associated with the client's read queries while not degrading quality?

- Modify all database calls to use eventually consistent reads and advise customers that transaction history may be one second out-of-date.

- Change the primary table to partition on TransactionID, create a GSI partitioned on customer and sorted on date, project small attributes into GSI and then query GSI for summary data and the primary table for JSON details.

- Vertically partition the table, store base attributes on the primary table and create a foreign key reference to a secondary table containing the JSON data. Query the primary table for summary data and the secondary table for JSON details.

- Create an LSI sorted on date, project the JSON attribute into the index, and then query the primary table for summary data and the LSI for JSON details.

Correct answer: C
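The cost argument for vertical partitioning comes down to read capacity arithmetic: a strongly consistent read consumes one read capacity unit (RCU) per 4 KB of item size, rounded up, and an eventually consistent read consumes half that. This is a minimal sketch that models single-item reads only (a `Query` instead rounds up the summed size of the returned items), and the ~1 KB size of a summary item without the JSON attribute is an assumption, not a figure from the question.

```python
import math

RCU_BLOCK_BYTES = 4 * 1024  # one strongly consistent RCU covers up to 4 KB


def read_units(item_bytes: int, eventually_consistent: bool = False) -> float:
    """RCUs consumed by reading one item of the given size."""
    units = math.ceil(item_bytes / RCU_BLOCK_BYTES)
    return units / 2 if eventually_consistent else units


FULL_ITEM = 250 * 1024   # ~250 KB item including the JSON attribute (from the question)
SUMMARY_ITEM = 1 * 1024  # assumed size of the base attributes alone (hypothetical)

if __name__ == "__main__":
    # Single table: every one of 100 reads pulls the full item
    before = 100 * read_units(FULL_ITEM)
    # Vertically partitioned: 90 history reads hit the small item, 10 detail reads fetch the JSON
    after = 90 * read_units(SUMMARY_ITEM) + 10 * read_units(FULL_ITEM)
    print(before, after)
```

With these assumptions, 100 reads drop from 6,300 RCUs to 720, because the history view no longer pays for the JSON payload it never displays; switching to eventually consistent reads would only halve the original figure.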

Question 7

A photo-sharing service stores pictures in Amazon Simple Storage Service (S3) and allows application sign-in using an OpenID Connect-compatible identity provider. Which AWS Security Token Service approach to temporary access should you use for the Amazon S3 operations?

- SAML-based Identity Federation

- Cross-Account Access

- AWS Identity and Access Management roles

- Web Identity Federation

Correct answer: D

Question 8

An Amazon EMR cluster using EMRFS has access to Megabytes of data on Amazon S3, originating from multiple unique data sources. The customer needs to query common fields across some of the data sets to be able to perform interactive joins and then display results quickly.

Which technology is most appropriate to enable this capability?

- Presto

- MicroStrategy

- Pig

- R Studio

Correct answer: A

Explanation:

https://dzone.com/articles/getting-introduced-with-presto

Question 9

You have an application running on an Amazon Elastic Compute Cloud (EC2) instance that uploads 5 GB video objects to Amazon Simple Storage Service (S3). Video uploads are taking longer than expected, resulting in poor application performance. Which method will help improve the performance of your application?

- Enable enhanced networking

- Use Amazon S3 multipart upload

- Leveraging Amazon CloudFront, use the HTTP POST method to reduce latency.

- Use Amazon Elastic Block Store Provisioned IOPs and use an Amazon EBS-optimized instance

Correct answer: B
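Multipart upload helps because the object is split into parts that can be uploaded in parallel and retried individually. Amazon S3 requires each part to be between 5 MiB and 5 GiB (only the last part may be smaller) and allows at most 10,000 parts, while a single PUT is capped at 5 GB. This is a minimal sketch of planning the part boundaries; the function name and the 100 MiB part size are hypothetical choices, and 5 GB is treated as 5 GiB for round numbers.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB
MIN_PART = 5 * MIB   # minimum size for every part except the last
MAX_PART = 5 * GIB   # maximum size of any part
MAX_PARTS = 10_000   # maximum number of parts per upload


def plan_parts(object_size: int, part_size: int) -> list:
    """Return (part_number, offset, length) tuples covering the whole object."""
    if not MIN_PART <= part_size <= MAX_PART:
        raise ValueError("part size must be between 5 MiB and 5 GiB")
    parts = []
    offset = 0
    number = 1  # S3 part numbers start at 1
    while offset < object_size:
        length = min(part_size, object_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    if len(parts) > MAX_PARTS:
        raise ValueError("more than 10,000 parts; use a larger part size")
    return parts


if __name__ == "__main__":
    parts = plan_parts(5 * GIB, 100 * MIB)
    print(len(parts), parts[-1])
```

In practice the AWS SDKs do this splitting for you; for example, boto3's `upload_file` switches to multipart upload above the `multipart_threshold` configured in `boto3.s3.transfer.TransferConfig`.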

Question 10

When you put objects in Amazon S3, what is the indication that an object was successfully stored?

- An HTTP 200 result code and MD5 checksum, taken together, indicate that the operation was successful

- A success code is inserted into the S3 object metadata

- Amazon S3 is engineered for 99.999999999% durability. Therefore there is no need to confirm that data was inserted.

- Each S3 account has a special bucket named s3_logs. Success codes are written to this bucket with a timestamp and checksum

Correct answer: A
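A client can make this check concrete: send the base64-encoded MD5 digest of the body in the `Content-MD5` header so that Amazon S3 rejects the PUT if the bytes it received do not match, then compare the returned ETag with the hex MD5 of the local data. The ETag equals the MD5 digest only for single-part uploads that are unencrypted or use SSE-S3, not for multipart uploads or SSE-KMS/SSE-C objects. This is a minimal sketch; the helper names are hypothetical.

```python
import base64
import hashlib


def content_md5(data: bytes) -> str:
    """Value for the Content-MD5 request header: base64 of the raw 16-byte MD5 digest."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def etag_matches(data: bytes, etag: str) -> bool:
    """Compare a returned ETag (quoted hex MD5 for simple uploads) with the local data."""
    return etag.strip('"').lower() == hashlib.md5(data).hexdigest()


if __name__ == "__main__":
    body = b"example payload"
    print(content_md5(body))
    # Simulate the ETag a simple, unencrypted PUT would return for this body
    print(etag_matches(body, '"' + hashlib.md5(body).hexdigest() + '"'))
```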

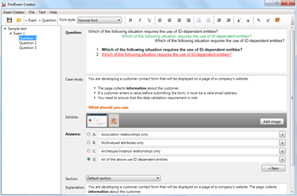

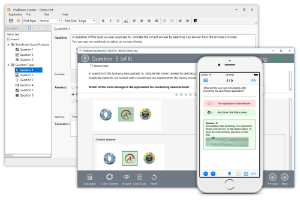

HOW TO OPEN VCE FILES

Use VCE Exam Simulator to open VCE files

HOW TO OPEN VCEX FILES

Use ProfExam Simulator to open VCEX files

ProfExam at a 20% markdown

You have the opportunity to purchase ProfExam at a 20% reduced price

Get Now!